Sensors

Introduction

EA robots such as RoboThespian and SociBot have a range of sensors that allow for additional interaction possibilities: making eye contact with passers-by, responding to gestures (e.g. a wave of the hand), recognising facial expressions, and reading and responding to QR codes.

Headcam

An RGB webcam mounted within the head ensures that our robots see where they are looking.

The video stream from the camera can be viewed on the Touchscreen and in the Tritium GUI Sensors. It is also used with Telepresence: see exactly what your robot sees and make the robot look at the people you are talking to.

The robot can be supplied set up with the image processing software SHORE and VisageFaceTrack, which process the headcam video stream.

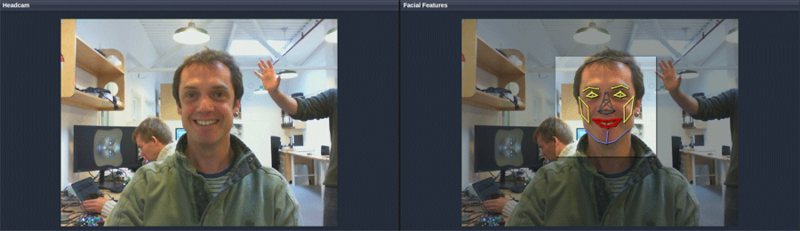

Visage

Visage is a markerless facial motion capture technology.

With Visage, you can obtain real-time tracking of 3D head pose, gaze direction, and facial feature coordinates, including mouth contour, chin pose, eyebrow contours and eye closure.

Combined with Visage, Tritium (the robotics software framework used by all Engineered Arts robots) gives you access to Action Unit data based on the Facial Action Coding System (FACS), which was developed by Ekman to describe and categorize facial expressions. EA robots can recognize expressions and emotions and incorporate this information into their responses and reactions.

Additionally, EA's real-time tracker can connect with your web camera and our InYaFace facial projection system, allowing our Projected Face Robots to mimic and display your facial expression as you make it.
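To illustrate how the Action Unit data mentioned above might be turned into an expression label, here is a minimal sketch. It does not use the Tritium or Visage API: the input dictionary (AU number to intensity between 0 and 1), the `classify_expression` function and the threshold are all assumptions made for this example. The AU combinations are simplified versions of commonly cited FACS prototypes.

```python
from typing import Dict, Set

# Simplified, commonly cited FACS prototype combinations.
# These are illustrative and are NOT taken from Tritium or Visage.
PROTOTYPES: Dict[str, Set[int]] = {
    "happiness": {6, 12},        # cheek raiser + lip corner puller
    "sadness": {1, 4, 15},       # inner brow raiser + brow lowerer + lip corner depressor
    "surprise": {1, 2, 5, 26},   # brow raisers + upper lid raiser + jaw drop
    "anger": {4, 5, 7, 23},      # brow lowerer + lid raiser + lid tightener + lip tightener
}


def classify_expression(au_intensity: Dict[int, float], threshold: float = 0.5) -> str:
    """Return the prototype whose Action Units are all active, else "neutral".

    au_intensity is a hypothetical input format: AU number -> intensity in [0, 1].
    """
    active = {au for au, value in au_intensity.items() if value >= threshold}
    matches = [label for label, required in PROTOTYPES.items() if required <= active]
    if not matches:
        return "neutral"
    # If several prototypes match, prefer the most specific one (most AUs).
    return max(matches, key=lambda label: len(PROTOTYPES[label]))


if __name__ == "__main__":
    print(classify_expression({6: 0.8, 12: 0.9}))
    print(classify_expression({12: 0.9}))
```

A real application would probably weight intensities rather than apply a hard threshold; the all-or-nothing match is used here only to keep the idea easy to follow.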

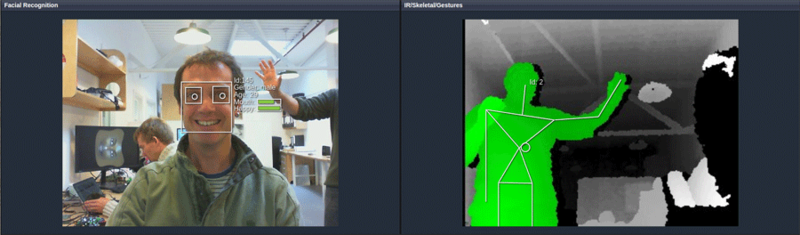

SHORE

In addition to the facial motion capture system Visage, EA robots can integrate SHORE, an image processing software suite developed by Fraunhofer IIS. SHORE is able to find and track faces, accurately estimate gender and provide a good estimate of a person's age. It can also recognise facial features and expressions.

These abilities are used to bring robots to life. SociBot or RoboThespian will make eye contact without prompting, attend to different speakers based on their body language, and will know how to address the person in view based on an age/gender estimate. Expression recognition allows a versatile and customizable audience experience.
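As a rough sketch of the behaviour described above, the following shows one way to pick a person to look at and choose a form of address from an age and gender estimate. The `Face` record, its field names, the greetings and the age cut-off are all hypothetical; they do not reflect SHORE's actual output format or the logic used on the robots.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Face:
    """Hypothetical per-face record; not SHORE's real output structure."""
    face_id: int
    age: float      # estimated age in years
    gender: str     # "male", "female" or "unknown"
    width_px: int   # face width in the image, a rough proxy for closeness


def pick_focus(faces: List[Face]) -> Optional[Face]:
    """Choose the face that appears largest, i.e. is probably nearest."""
    return max(faces, key=lambda f: f.width_px, default=None)


def greeting_for(face: Face, child_age: float = 13.0) -> str:
    """Pick a form of address from the age and gender estimate."""
    if face.age < child_age:
        return "Hi there!"
    if face.gender == "female":
        return "Good day, madam."
    if face.gender == "male":
        return "Good day, sir."
    return "Good day."


if __name__ == "__main__":
    crowd = [Face(1, 34.0, "female", 120), Face(2, 8.0, "male", 90)]
    target = pick_focus(crowd)
    if target is not None:
        print(target.face_id, greeting_for(target))
```

Falling back to a neutral greeting when the gender estimate is "unknown" keeps the robot polite when the estimate is unavailable.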

Integrated IR Depth Sensor

Using the OpenNI framework, the infrared depth sensor complements the facial data available from the SHORE module.

RoboThespian and SociBot can track the position of more than 12 people at a time, and they rarely confuse one person with another, even when people are standing close together or moving in a crowd. They can also detect gestures like hand waves and body poses.
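To give a feel for how a gesture such as a hand wave can be recognised from tracked positions, here is a minimal sketch. It assumes you already have a short history of one hand's horizontal position (in metres) from some tracker; how that data is obtained is not shown, and the function names and thresholds are invented for this example rather than taken from OpenNI or Tritium.

```python
from typing import Sequence


def count_reversals(hand_x: Sequence[float], min_swing: float = 0.10) -> int:
    """Count left/right direction changes whose swing is at least min_swing."""
    if len(hand_x) < 2:
        return 0
    reversals = 0
    direction = 0          # +1 moving right, -1 moving left, 0 not yet known
    extreme = hand_x[0]    # furthest point reached in the current direction
    for x in hand_x[1:]:
        if direction == 0:
            # Wait for the first clear movement before fixing a direction.
            if abs(x - extreme) >= min_swing:
                direction = 1 if x > extreme else -1
                extreme = x
        elif (x - extreme) * direction > 0:
            extreme = x    # still travelling the same way; extend the swing
        elif (extreme - x) * direction >= min_swing:
            direction = -direction
            extreme = x
            reversals += 1
    return reversals


def is_wave(hand_x: Sequence[float], min_swing: float = 0.10, min_reversals: int = 3) -> bool:
    """Treat several large back-and-forth swings as a wave (illustrative rule)."""
    return count_reversals(hand_x, min_swing) >= min_reversals


if __name__ == "__main__":
    print(is_wave([0.0, 0.3, 0.0, 0.3, 0.0]))
    print(is_wave([0.0, 0.2, 0.4, 0.6, 0.8]))
```

Requiring a minimum swing filters out tracking jitter, and requiring several reversals separates a wave from a single reach to one side.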

The depth sensor is mounted within the torso of the robots.

UPDATE: due to discontinuation of the product by the manufacturer, RT4 models do not include an IR depth sensor.

Advanced use / custom applications / additional sensors

All video streams are accessible to advanced users through Tritium - you can apply your own processing to the video streams if desired.

The Tritium Node - Video Capture handles video capture.
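As an example of the kind of custom processing you might apply to a video stream, the sketch below estimates how much of the image changed between two frames (simple frame differencing). It assumes frames have already been obtained and converted to rows of 8-bit grayscale values; retrieving frames from Tritium is not shown, and the `Frame` type, function name and threshold are assumptions made for this illustration.

```python
from typing import List

Frame = List[List[int]]  # rows of 8-bit grayscale pixel values (hypothetical format)


def motion_fraction(prev: Frame, curr: Frame, pixel_threshold: int = 25) -> float:
    """Return the fraction of pixels whose brightness changed noticeably."""
    if len(prev) != len(curr) or any(len(a) != len(b) for a, b in zip(prev, curr)):
        raise ValueError("frames must have the same dimensions")
    total = sum(len(row) for row in curr)
    if total == 0:
        return 0.0
    changed = sum(
        1
        for row_prev, row_curr in zip(prev, curr)
        for a, b in zip(row_prev, row_curr)
        if abs(a - b) > pixel_threshold
    )
    return changed / total


if __name__ == "__main__":
    before = [[0, 0], [0, 0]]
    after = [[0, 100], [0, 0]]
    print(motion_fraction(before, after))
```

In practice an image library would do this far faster on full-size frames; plain lists are used here only to keep the example self-contained.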

Furthermore, advanced users can access the outputs of SHORE, Visage and the depth sensor directly: Tritium Node - Shore, Tritium Node - Visage, Tritium Node - OpenNI.

Because Tritium is an extensible system, further sensors can be integrated into the framework. Please ask.