Robot Management (IOServe)

This page applies to RoboThespian models up to and including RT3.6 running IOServe, and to SociBot V1 models. For more recent Tritium robots, please see Tritium GUI. If you are not sure of your robot model, check the serial number plate.

Overview

Log in to Robot Management. A single login provides access to one or more of the following features:

- management of the robot's behaviours through Control Functions using Python scripts

- management of the content that appears on RoboThespian's interface

- viewing of output from the depth sensor and the expression recognition sensors

- access to Virtual RoboThespian and live control of real robot using the Virtual RoboThespian interface

- transfer of content from Virtual RoboThespian to the real robot

Access

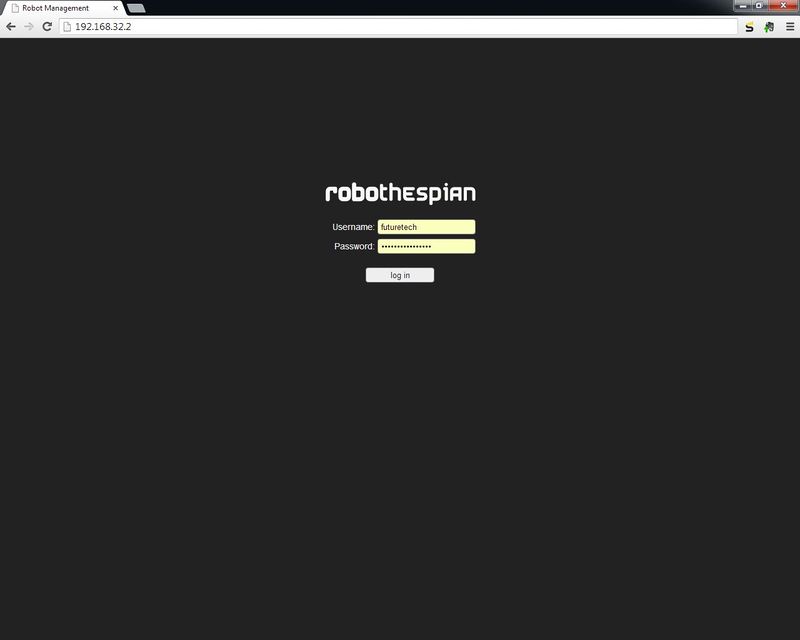

If you connect a PC or laptop to the LAN side of the robot's router, you can reach Robot Management at the robot's IP address: http://192.168.32.2/

Use the username and password supplied by Engineered Arts. These are the same credentials used for Virtual RoboThespian.

Please note that Robot Management works only in the Chrome or Chromium browser.
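If the login page does not load, it can help to confirm that the robot answers on the network before looking at browser issues. The following is a minimal sketch using only the Python standard library. The function names `management_url` and `robot_management_reachable` are hypothetical helpers written for this example and are not part of any Engineered Arts software. The sketch assumes the default address given above.

```python
import urllib.error
import urllib.request

ROBOT_HOST = "192.168.32.2"  # address from this page; adjust if your network differs


def management_url(host: str = ROBOT_HOST) -> str:
    """Build the Robot Management URL for a given host (hypothetical helper)."""
    return f"http://{host}/"


def robot_management_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if the server at `url` sends back any HTTP response."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # The server answered with an error status (for example, a page
        # that requires login), so it is still reachable.
        return True
    except (urllib.error.URLError, OSError):
        # No route to host, connection refused, or timeout.
        return False
```

Calling `robot_management_reachable(management_url())` from a machine on the LAN side of the robot's router should return `True` when the page is being served. A `False` result suggests a cabling, router or addressing problem rather than a browser problem.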

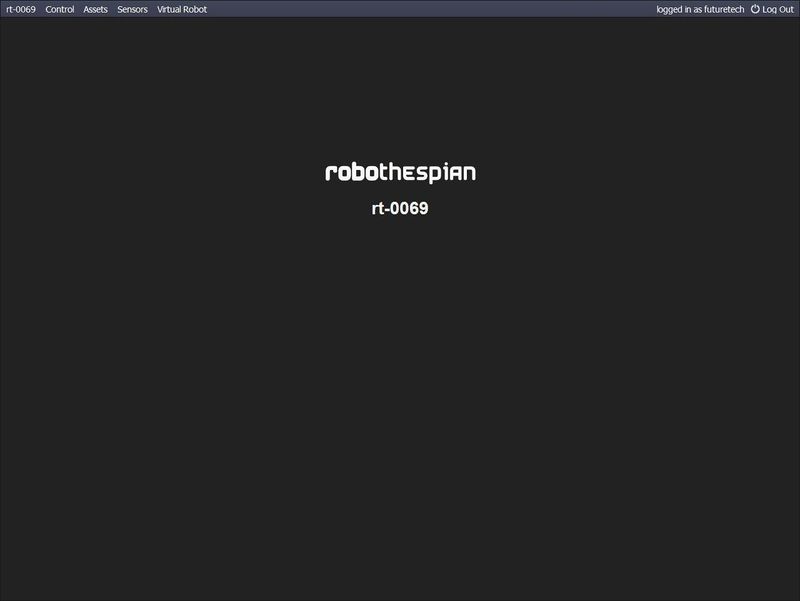

After login, you will see the following from left to right near the top of the screen: the robot serial number (e.g. "rt-0069"), "Control", "Assets", "Sensors" and "Virtual Robot".

Virtual RoboThespian

Clicking on "Virtual Robot" allows you to access Virtual Robot via the robot.

The "Control" button near the top allows you to control your robot live from Virtual RoboThespian. Any movements the onscreen robot makes will be made by your robot. Anything you play in Virtual RoboThespian on the timeline will also play live on the real RoboThespian. Press the "Control" button to toggle live control on or off.

For more instructions on Virtual RoboThespian, please see Virtual Robot.

Asset Management

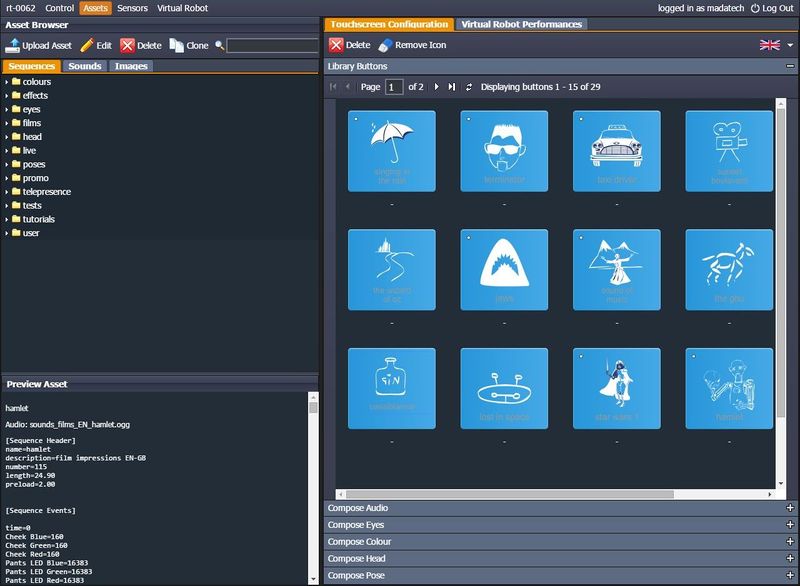

To manage the content on the LIBRARY page of the touchscreen interface, and to transfer content from Virtual RoboThespian to your robot, use the "Assets" button.

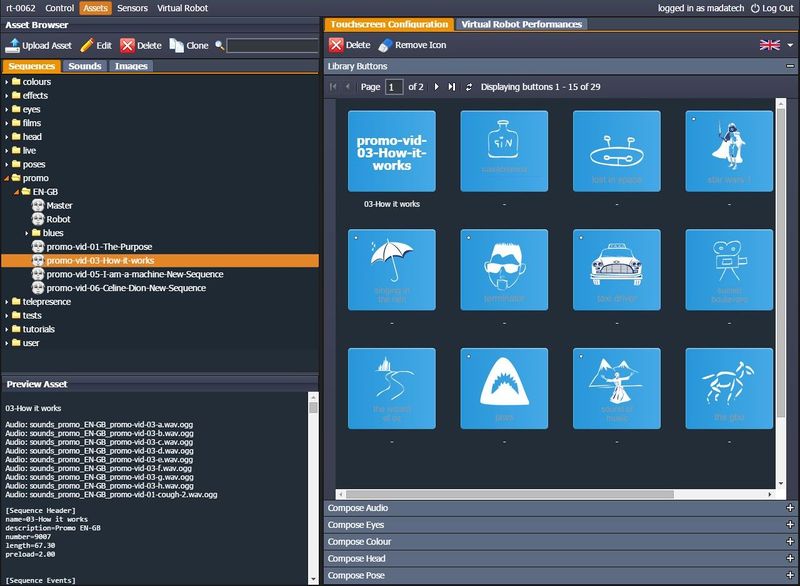

There are 3 main areas to the Assets interface:

Asset Browser, Asset Preview, and Touchscreen Configuration

Asset Browser

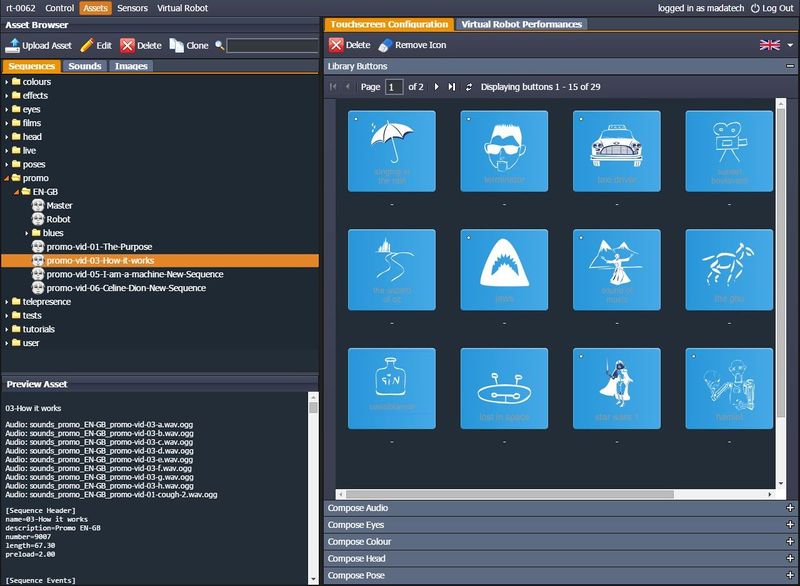

This area shows a tree of the assets that exist on the robot. The assets are displayed by type, according to the option currently selected in the dropdown menu.

The toolbar above the tree also allows the user to upload assets as well as rename and delete them.

Assets are sorted into "sequences", "sounds" or "images". Select the type of asset from the drop down menu next to "Asset type:". Changing the asset type in the drop-down menu will change which assets are displayed, and which kind you are permitted to upload.

When uploading a new asset, the bottom left section of the interface will be populated by a type-specific form/menu set.

- Category: Select the folder (i.e. the category) the asset should be associated with. Only categories associated with the type of asset you are uploading can be chosen. To change asset type, choose from the dropdown menu at the top of the Asset Browser.

- Language: you can choose to restrict or specify asset display options depending on the robot's selected language. For adding a general, cross-cultural asset, choose 'All Languages'.

- Name: Enter the asset name. Note that since underscores are used to specify category and subcategories, they should be avoided in the name of any assets you upload. Hyphens are acceptable.

- File: Choose the file to upload by browsing to it using the browse button on the right, or typing the full path into the text field.
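The naming rule above (no underscores, hyphens acceptable) can be checked before uploading a batch of files. This is a minimal sketch only. The functions `check_asset_name` and `suggest_asset_name` are hypothetical helpers written for this example, and Robot Management itself may apply further rules that are not described on this page.

```python
def check_asset_name(name: str) -> list[str]:
    """Return a list of problems with a proposed asset name (empty if none)."""
    problems = []
    if not name.strip():
        problems.append("name is empty")
    if "_" in name:
        # Underscores are used to separate category and subcategories.
        problems.append("name contains an underscore")
    return problems


def suggest_asset_name(name: str) -> str:
    """Replace underscores with hyphens, which are acceptable in asset names."""
    return name.strip().replace("_", "-")
```

For example, `check_asset_name("wave_hello")` reports the underscore, and `suggest_asset_name("wave_hello")` gives `"wave-hello"`.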

Asset Preview

This area of the interface is updated whenever the user highlights an asset in the Asset Browser.

It is context sensitive: if an image asset is highlighted, the image is displayed; if an audio asset is highlighted, playback controls for that audio item are displayed; and so on.

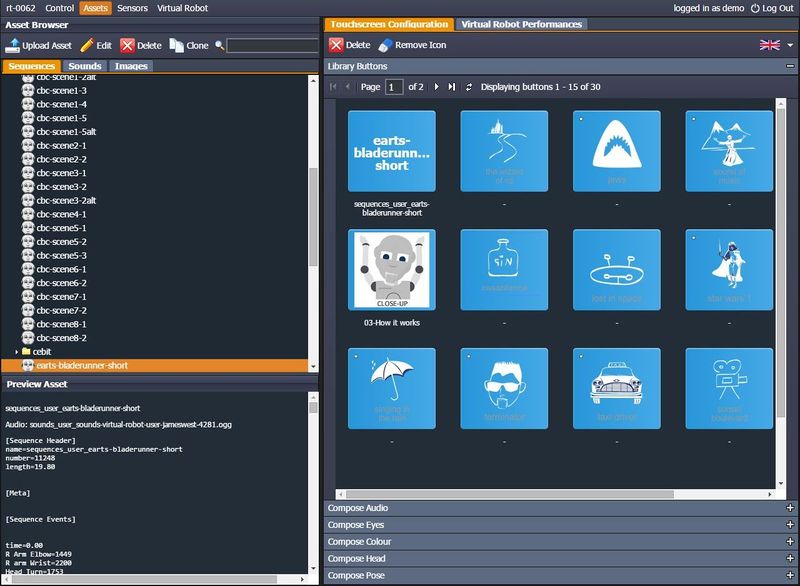

Touchscreen Configuration

On the right are the screens of RoboThespian's interface that you can add content to or edit. These are the "Library" screen and the Compose screens ("Compose Audio", "Compose Eyes", "Compose Colour", "Compose Head", "Compose Pose").

To create a new button, drag a sequence from the Asset Browser into the currently expanded Touchscreen Interface grid. To add an icon to a button, drag an image from the Asset Browser onto a row in the grid.

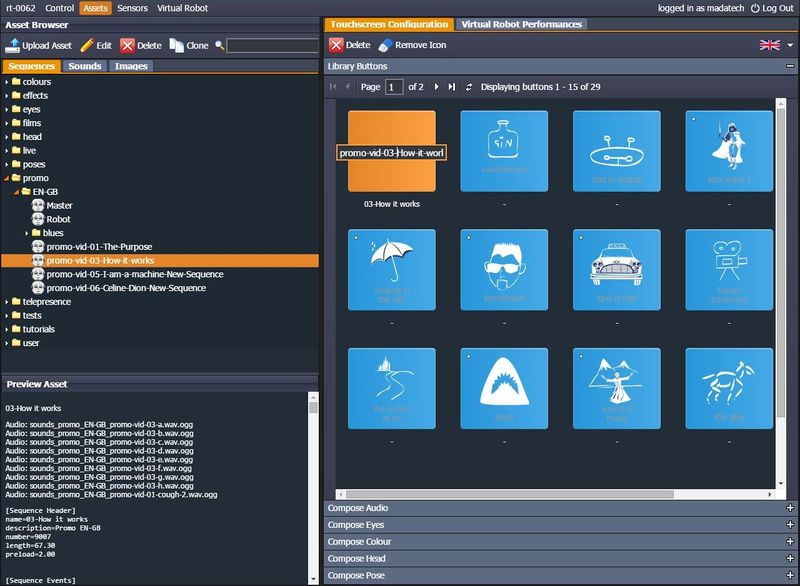

For example, click on the desired sequence in the Asset Browser:

Drag the sequence into the "Library" at the desired location:

You can edit the label that appears on the library screen by clicking on the title:

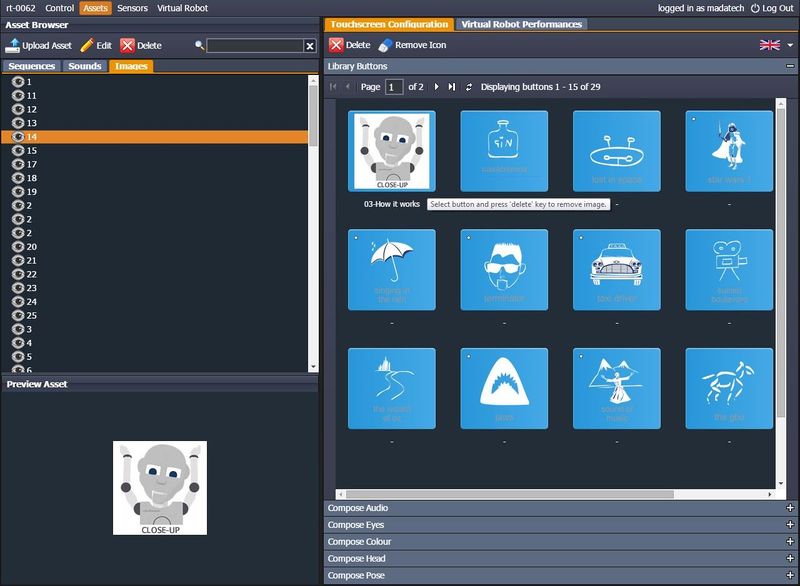

You can add an icon from the Images tab. Switch to images, browse to the desired icon and drag it into place. The icon can be an image you have uploaded yourself.

Importing content from Virtual RoboThespian

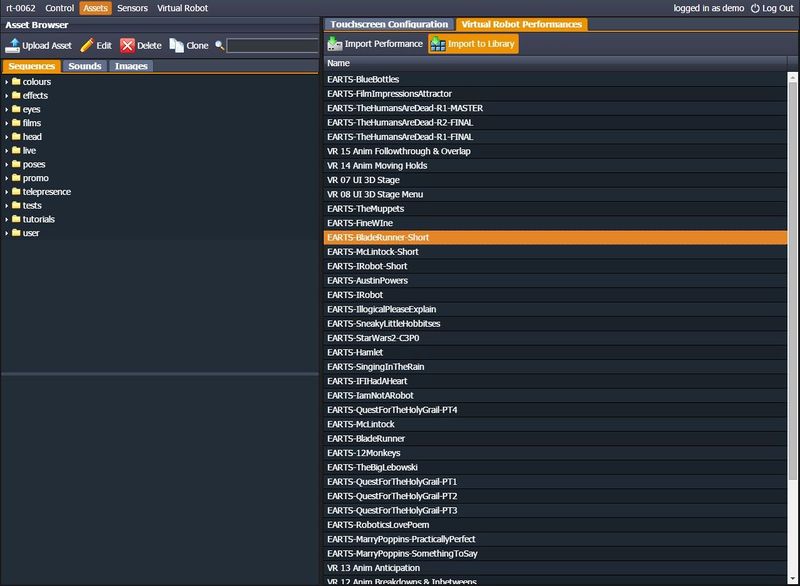

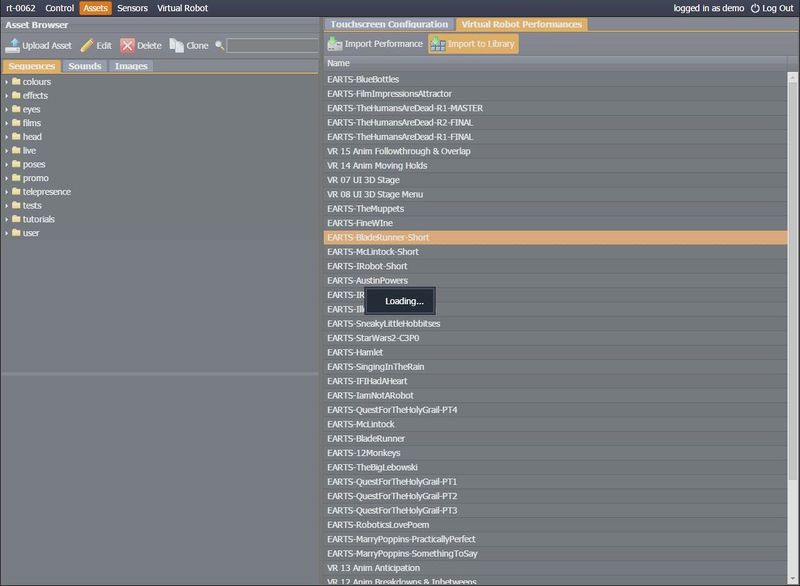

To import content generated with Virtual RoboThespian onto your real RoboThespian, click on the "Virtual Robot Performances" tab.

Any performances in your Virtual RoboThespian account will be listed.

Select the performance to import, then click "Import to Library" to add the performance to the library in one click.

A loading message will appear for a few moments. Once the import is complete, a confirmation message will appear.

Your sequence will be imported to the first button of the Library Buttons:

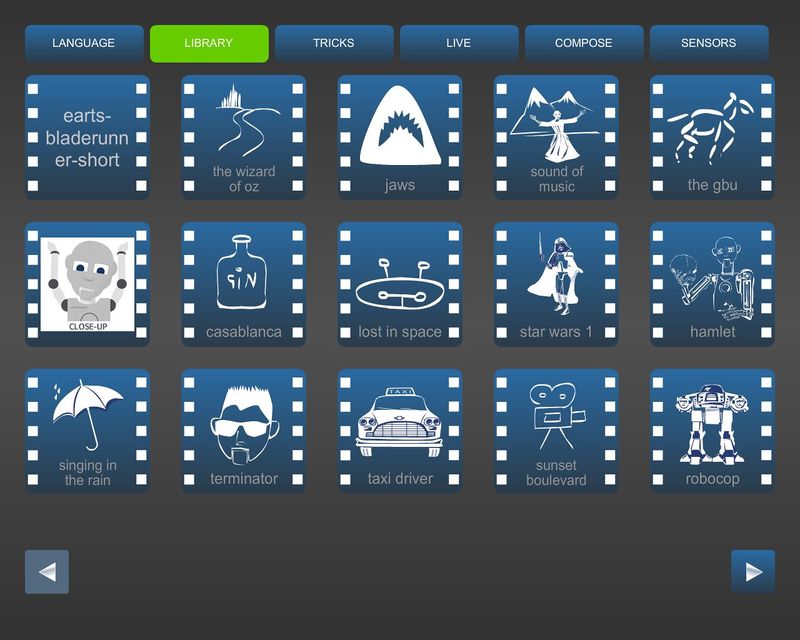

You will see the change on the touchscreen as well. On the touchscreen, switch to another tab (e.g. LIVE) and then back to LIBRARY to load the new configuration:

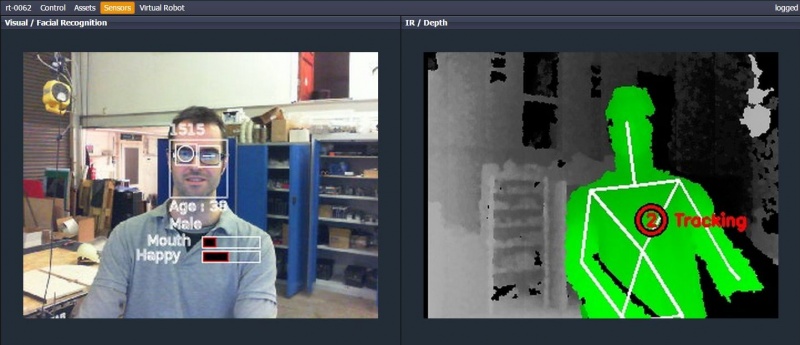

Sensors view

The Sensors tab displays output from a selection of RoboThespian's built-in sensors. By default, this display includes the video feeds from the webcam in the head and the infra-red depth sensor in the chest. Here you can also see a visual representation of the SHORE and OpenNI data used by the robot. SHORE detects faces and assigns each one an age, gender and emotion estimate, which are displayed on the sensor screen (as seen on the left in the image below). OpenNI detects people within the IR range of the chest sensor and attempts to determine the pose of their body skeleton (seen on the right).